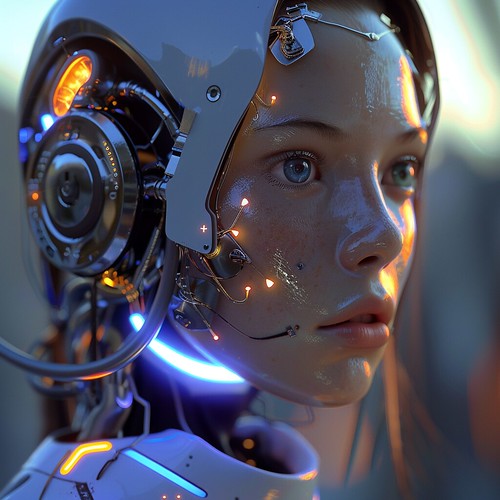

Robots, once limited to physical tasks, can now understand and respond to human speech. Last month, FigureAI, a robotics startup, announced a collaboration with OpenAI to build a specialized AI model for robots. The latest results, shared yesterday, have impressed the AI community.

What’s the latest? In a demonstration released yesterday, a robot called Figure 01 carried out a series of tasks based solely on verbal commands:

- The robot identifies and describes the objects around it.

- When a person asks for something to eat, it hands them an apple.

- While sorting trash into a basket, it explains why it handed over the apple.

- Asked where the dishes should go, it places them in a rack, handling them gently and answering questions naturally along the way.

The demo suggests a significant advance in robotic reasoning. Figure stresses that the robot’s actions are not pre-planned; instead, they reflect its ability to learn new movements and skills over time. Still, there are noticeable delays and occasional pauses while the robot formulates its responses.

What’s next? Recent AI breakthroughs have accelerated progress in robotics. Companies such as Tesla and Hanson Robotics are developing their own versions of this technology, and commercially available robots of this kind may arrive soon, which could mark a turning point for the field.

You can watch the full demo video of Figure 01 here.
